AI/ML Tagging

Overview

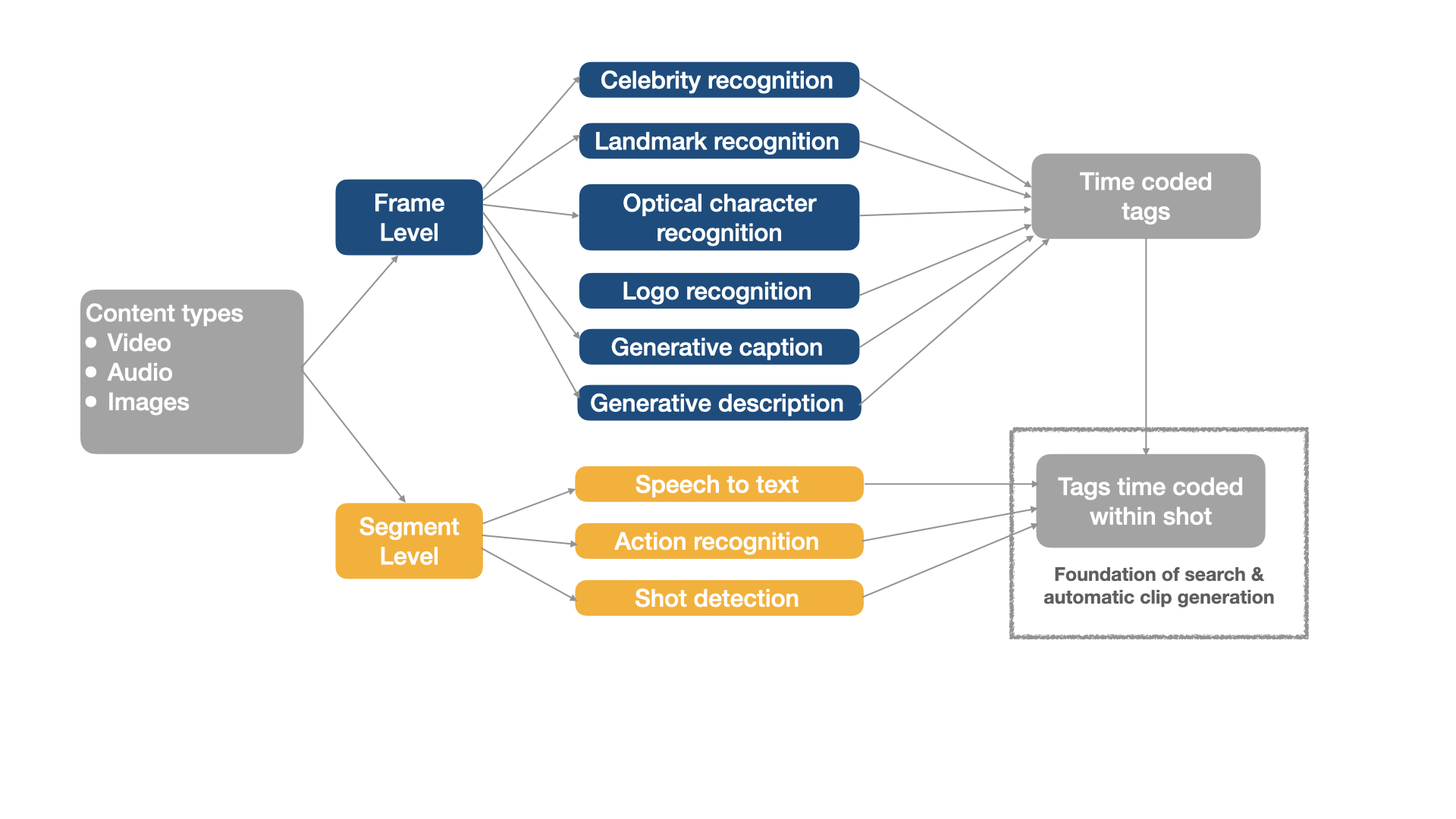

At Eluvio, we have developed the Eluvio Tagger, a multi-modal inference engine for universal video context understanding that produces both frame-level and segment-level features, as shown in Fig. 1. The frame-level features are celebrity, landmark, optical character recognition (OCR), logo, caption, and description: we tag sampled frames and obtain frame-indexed tagger results. The segment-level features are action and speech-to-text, which enrich the semantic concepts and topics. We also developed shot detection; all other tags are aggregated into shots based on their timestamps to enable downstream tasks such as clip search and video clipping. In the inference engine, each feature/model consists of a single- or multi-stage deep neural network trained from the ground up on either open-source or custom large-scale video/audio datasets.

Content Fabric Integration

Universal video context understanding has been deployed to the Content Fabric as a native content tagging service. The service can be invoked either during content ingestion or afterwards through the tagger API. See here for its usage details.

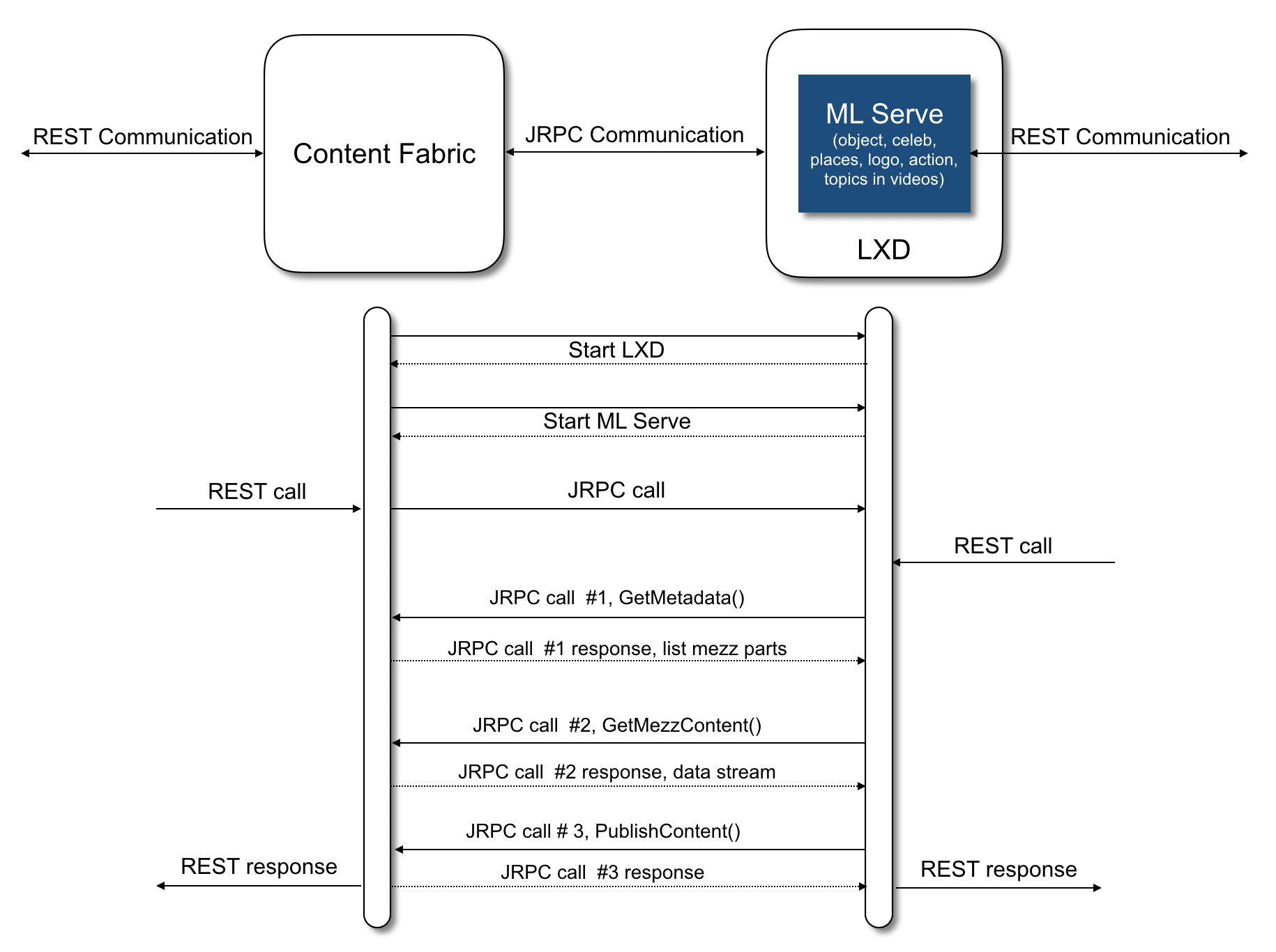

The ML inference engine, written in Python, is hosted in Linux containers (LXC) and communicates with the Content Fabric through JRPC channels. It is exposed as a REST service. See Fig. 2 for workflow details.

The native tagging service on the Fabric allows content viewers to run just-in-time clip queries against the video metadata, which enables dynamic and personalized video/clip recommendation. Together with the tagging service, the just-in-time programmatic capabilities of the Fabric allow content providers to create customized clips, e.g. a scene-, topic-, or activity-based clipping service that leverages content and audience metadata.

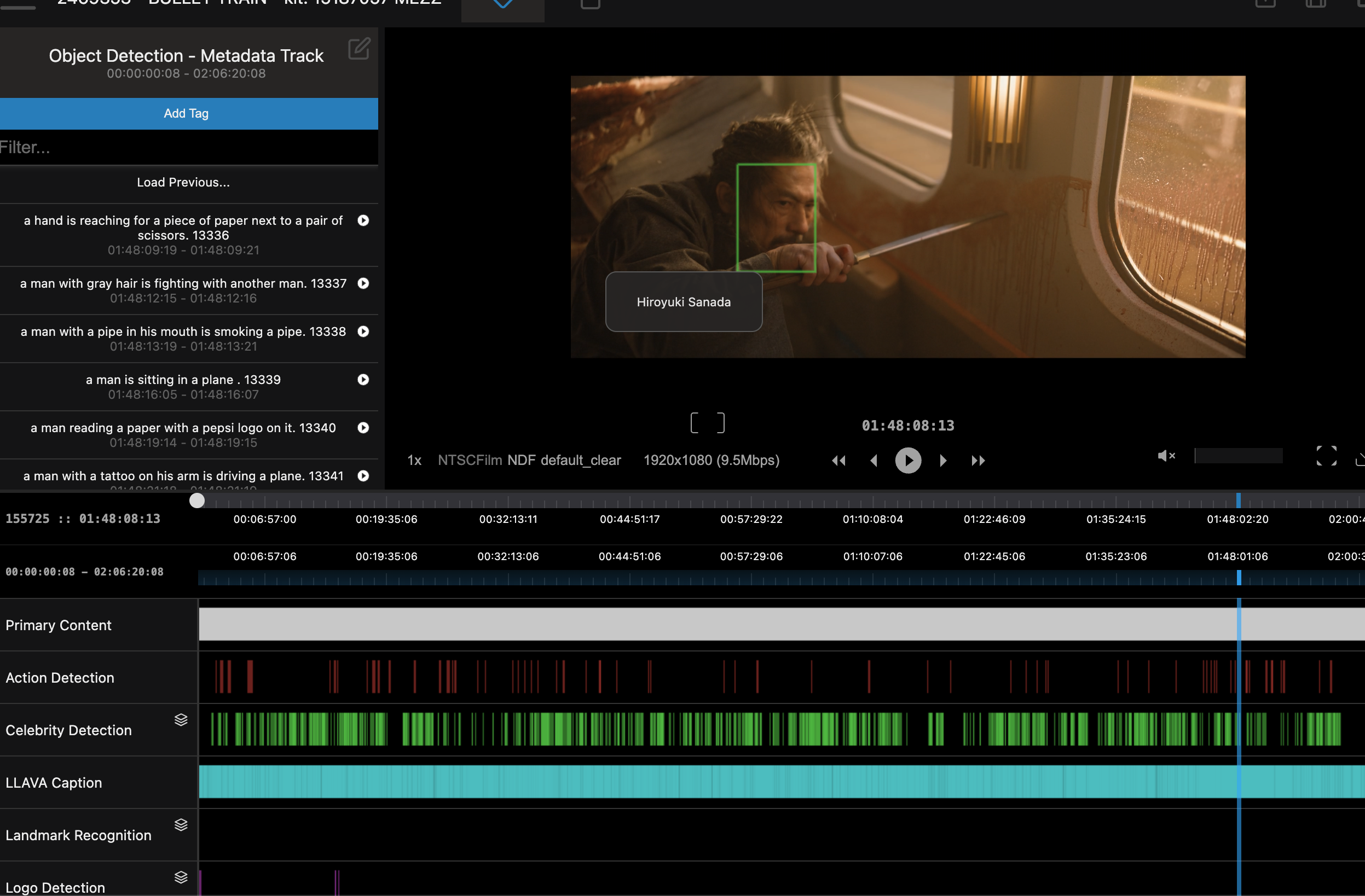

The tagging service can also be used to automate many manual media curation tasks programmatically, including automatic identification of credits via OCR, thumbnail or “poster” generation from key frames, identification of key brands/sub-franchises or activities for advertising insertion, and automatic identification of localization requirements (e.g. banned scenes). Here is an example query for “fighting” scenes in the movie Bullet Train. Both the generative caption and the generative description tracks surface relevant clips, which can then be used for clipping and advertising insertion.

Tagger Data Model Explanation

Tagger results are stored as JSON files in the metadata associated with the tagged content. Track files, named video-tags-tracks-00xx.json, store time-coded tags (as shown in Fig. 1). They also contain tags time-coded within shots, i.e., the aggregated shot_tags used to create search index objects.

In overlay files (video-tags-overlay-00xx.json), tags are frame-indexed and hold frame-level results such as celebrity, logo, OCR, landmark, generative caption, and generative description. Overlay files also include bounding box data for each model, where applicable, as well as the confidence scores returned by model inference. Bounding boxes and confidence scores are omitted from the track files.

Tagger File Structure

The shot_tags in the track file serve as the foundation for search and automatic clip generation. Both frame-level and segment-level model results are merged and aggregated into shot_tags. The start_time and end_time of each shot (in milliseconds) are determined by the shot detection model.
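The aggregation step can be illustrated with a minimal Python sketch. It assumes that a tag belongs to a shot whenever the two time ranges overlap; the actual rule used by the Tagger is not specified here, and the function name aggregate_into_shots is hypothetical. The sample values are taken from the track-file example in this section.

```python
def aggregate_into_shots(shots, tracks):
    """Group each track's tags under every shot they overlap in time.

    Assumption: overlap of [start_time, end_time] ranges decides membership.
    All times are in milliseconds, as in the track files.
    """
    result = []
    for shot in shots:
        grouped = {}
        for label, tags in tracks.items():
            grouped[label] = [
                tag for tag in tags
                if tag["start_time"] < shot["end_time"]
                and tag["end_time"] > shot["start_time"]
            ]
        result.append({
            "start_time": shot["start_time"],
            "end_time": shot["end_time"],
            "text": grouped,
        })
    return result


# Shot boundaries as produced by shot detection (values from the example).
shots = [{"start_time": 635718, "end_time": 637220}]

# Time-coded tags keyed by track label (values from the example).
tracks = {
    "Celebrity Detection": [
        {"start_time": 636261, "end_time": 636469, "text": ["Brad Pitt"]},
        {"start_time": 643017, "end_time": 644227, "text": ["Aaron Taylor-Johnson"]},
    ],
    "Logo Detection": [
        {"start_time": 871120, "end_time": 871496, "text": ["Audi"]},
    ],
}

shot_tags = aggregate_into_shots(shots, tracks)
print(shot_tags[0]["text"]["Celebrity Detection"])
```

Note that the sketch only groups whole tags. In the example data, the speech-to-text entry inside a shot is shorter than the original segment, which suggests the real aggregation also splits long segments at shot boundaries; that step is not reproduced here.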

Tagger results example - version 1

- Track files (video-tags-tracks-00xx.json):

{

"version": 1,

"video_level_tags": {},

"metadata_tags": {

"action_detection": {

"label": "Action Detection",

"tags": [

{

"end_time": 816107,

"start_time": 814814,

"tags": { "sword fighting": 0.983 },

"text": ["sword fighting"]

},

{

"end_time": 867492,

"start_time": 866199,

"tags": { "motorcycling": 0.9722 },

"text": ["motorcycling"]

}, ...

]

},

"celebrity_detection": {

"label": "Celebrity Detection",

"tags": [

{

"end_time": 602644,

"start_time": 600934,

"text": ["Brian Tyree Henry"]

},

{ "end_time": 636469, "start_time": 636261, "text": ["Brad Pitt"] },

{

"end_time": 644227,

"start_time": 643017,

"text": ["Aaron Taylor-Johnson"]

},

{

"end_time": 1025066,

"start_time": 1024607,

"text": ["Hiroyuki Sanada"]

},

{ "end_time": 1035368, "start_time": 1035034, "text": ["Bad Bunny"] }, ...

]

},

"landmark_recognition": { "label": "Landmark Recognition", "tags": [] },

"logo_detection": {

"label": "Logo Detection",

"tags": [

{ "end_time": 852143, "start_time": 851017, "text": ["MercedesBenz"] },

{ "end_time": 871496, "start_time": 871120, "text": ["Audi"] }, ...

]

},

"object_detection": {

"label": "Object Detection",

"tags": [

{

"end_time": 608316,

"start_time": 608274,

"text": [

"a man in a white hat is standing in a train with a red suitcase behind him."

]

},

{

"end_time": 666708,

"start_time": 666666,

"text": [

"a woman with blonde hair is sitting in a train with a bottle of water on the window."

]

}, ...

]

},

"optical_character_recognition": {

"label": "Optical Character Recognition",

"tags": [

{ "end_time": 622539, "start_time": 622413, "text": ["NIPPON SPEE"] },

{ "end_time": 639055, "start_time": 638638, "text": ["SPEEDL"] }

]

},

"shot_detection": {

"label": "Shot Detection",

"tags": [

{

"end_time": 604521,

"start_time": 600600,

"tags": { "shot": 1 },

"text": ["shot"]

},

{

"end_time": 608942,

"start_time": 604562,

"tags": { "shot": 1 },

"text": ["shot"]

}, ...

]

},

"speech_to_text": {

"label": "Speech to Text",

"tags": [

{

"end_time": 631680,

"start_time": 601600,

"tags": {

"but if we're gonna stick with fruit we're not apple or orange so what's in this case are we doing this you know what's in the case money it's always money time drains are sophisticated now he's color and fruit sophisticated it's a cross hypodize with upper fruit they're adaptable what me you said six cars in economy save thirty passengers per car guessing two bags per se in terms to security yeah no fucking way i'm gonna find one": 1

},

"text": [

"but if we're gonna stick with fruit we're not apple or orange so what's in this case are we doing this you know what's in the case money it's always money time drains are sophisticated now he's color and fruit sophisticated it's a cross hypodize with upper fruit they're adaptable what me you said six cars in economy save thirty passengers per car guessing two bags per se in terms to security yeah no fucking way i'm gonna find one"

],

"wordpiece_timestamps": [

["but", 601799.9999880791],

["if", 601880.0000011921],

["we're", 601999.9999761581],

["gonna", 602119.9999809265],

["stick", 602359.9999904633],

...

["one", 631479.9991607666]

]

}

]

},

"llava_caption": {

"label": "LLAVA Caption",

"tags": [

{

"start_time": 602601,

"end_time": 602642,

"text": [

"The image shows a man with a distinctive hairstyle, featuring a large, curly afro. He is wearing a patterned jacket over a white shirt and a tie. The man appears to be in a public transportation setting, possibly a train or bus, as suggested by the rows of seats and the interior lighting. The man's expression is serious, and he is looking directly at the camera. The overall tone of the image is somewhat moody, with a color palette that includes cool blues and purples."

]

}

]

},

"shot_tags": {

"label": "Shot Tags",

"tags": [

{

"end_time": 637220,

"start_time": 635718,

"text": {

"Action Detection": [],

"Celebrity Detection": [

{

"end_time": 636469,

"start_time": 636261,

"text": ["Brad Pitt"]

}

],

"Landmark Recognition": [],

"Logo Detection": [],

"Object Detection": [

{

"end_time": 635927,

"start_time": 635885,

"text": [

"a man with a hat and glasses is looking through a window."

]

},

{

"end_time": 637178,

"start_time": 637136,

"text": [

"a man is standing in front of a window with a black background."

]

}

],

"Optical Character Recognition": [],

"Speech to Text": [

{

"end_time": 637220,

"start_time": 635718,

"text": ["holy shit box"]

}

],

"LLAVA Caption": [

{

"start_time": 635718,

"end_time": 635718,

"text": [

"The image shows a person standing in a dimly lit room with a focus on the person's face. The person is wearing glasses, a white cap, and a dark jacket. They appear to be looking at something in their hands, which could be a small object or a piece of paper. The room has a dark ambiance, and there are what seem to be seats or benches in the background. The lighting is uneven, with some areas of the room being brighter than others. The style of the image suggests it could be from a film or a photograph with a cinematic quality."

]

},

{

"start_time": 636635,

"end_time": 636635,

"text": [

"The image shows a person standing in a dimly lit room with a dark ambiance. The person appears to be wearing glasses and a coat, and they are looking directly at the camera with a focused expression. The room has a low ceiling and is filled with various pieces of luggage or equipment, which are partially visible and appear to be in a state of disarray. The lighting is uneven, with some areas of the room being more illuminated than others, creating a sense of depth and contrast. The overall mood of the image is somewhat mysterious or intense, given the darkness and the person's serious demeanor."

]

}

]

},

"Video LLAVA Caption": [

{

"start_time": 635718,

"end_time": 637220,

"text": "The video shows a man sitting at a table and playing the harmonica. He is wearing a hat and glasses and is seen playing the instrument in different positions. The video also features a woman sitting in front of him.</s>"

}

]

}

]

}

}

}
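As a rough illustration of how shot_tags can support clip queries, the following Python sketch scans a track file that has already been loaded into a dictionary and returns the shots whose tags mention a keyword. It is a local, brute-force scan, not the Fabric search index, and the helper names iter_texts and find_shots are hypothetical. The sample dictionary is an abbreviated copy of the example above.

```python
def iter_texts(value):
    """Yield every string under a "text" field, which may be a str or a list."""
    if isinstance(value, str):
        yield value
    elif isinstance(value, list):
        for item in value:
            yield from iter_texts(item)


def find_shots(track_file, keyword):
    """Return (start_time, end_time, label) for each shot track matching keyword."""
    needle = keyword.lower()
    hits = []
    for shot in track_file["metadata_tags"]["shot_tags"]["tags"]:
        for label, tags in shot.get("text", {}).items():
            matched = any(
                needle in text.lower()
                for tag in tags
                for text in iter_texts(tag.get("text", []))
            )
            if matched:
                hits.append((shot["start_time"], shot["end_time"], label))
    return hits


# Abbreviated track file, using values from the example above.
sample_track = {
    "version": 1,
    "metadata_tags": {
        "shot_tags": {
            "label": "Shot Tags",
            "tags": [
                {
                    "start_time": 635718,
                    "end_time": 637220,
                    "text": {
                        "Action Detection": [],
                        "Celebrity Detection": [
                            {"start_time": 636261, "end_time": 636469,
                             "text": ["Brad Pitt"]}
                        ],
                        "Speech to Text": [
                            {"start_time": 635718, "end_time": 637220,
                             "text": ["holy shit box"]}
                        ],
                    },
                }
            ],
        }
    },
}

celebrity_hits = find_shots(sample_track, "brad pitt")
print(celebrity_hits)
```

For a downloaded file, the dictionary would come from json.load on the track file. The returned start and end times are in milliseconds and can be used directly as clip boundaries.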

- Overlay files (video-tags-overlay-00xx.json):

{

"version": 1,

"overlay_tags": {

"frames_per_sec": 23.976023976023978,

"frame_level_tags": {

"14400": {

"object_detection": {

"tags": [

{

"text": "a man with blonde hair is sitting in a train .",

"confidence": 1.0,

"box": { "x1": 0.05, "y1": 0.05, "x2": 0.95, "y2": 0.95 }

}

]

},

"celebrity_detection": {},

"logo_detection": {},

"optical_character_recognition": {},

"landmark_recognition": {},

"timestamp_sec": 600600

},

"14424": {

"object_detection": {},

"celebrity_detection": {

"tags": [

{

"text": "Brian Tyree Henry",

"confidence": 0.4277,

"box": { "x1": 0.4348, "y1": 0.3123, "x2": 0.5598, "y2": 0.596 }

}

]

},

"logo_detection": {},

"optical_character_recognition": {},

"landmark_recognition": {},

"timestamp_sec": 601601

},

"14432": {

"object_detection": {},

"celebrity_detection": {

"tags": [

{

"text": "Brian Tyree Henry",

"confidence": 0.4582,

"box": { "x1": 0.431, "y1": 0.3248, "x2": 0.5653, "y2": 0.6271 }

}

]

},

"logo_detection": {},

"optical_character_recognition": {},

"landmark_recognition": {},

"timestamp_sec": 601935

},

"14440": {

"object_detection": {},

"celebrity_detection": {

"tags": [

{

"text": "Brian Tyree Henry",

"confidence": 0.4592,

"box": { "x1": 0.4379, "y1": 0.3323, "x2": 0.5743, "y2": 0.6319 }

}

]

},

"logo_detection": {},

"optical_character_recognition": {},

"landmark_recognition": {},

"timestamp_sec": 602268

},

"14448": {

"object_detection": {},

"celebrity_detection": {

"tags": [

{

"text": "Brian Tyree Henry",

"confidence": 0.4771,

"box": { "x1": 0.4394, "y1": 0.3262, "x2": 0.5755, "y2": 0.629 }

}

]

},

"logo_detection": {},

"optical_character_recognition": {},

"landmark_recognition": {},

"timestamp_sec": 602602

},

"14455": {

"object_detection": {},

"celebrity_detection": {},

"logo_detection": {},

"optical_character_recognition": {},

"landmark_recognition": {},

"timestamp_sec": 602894

},

"14463": {

"object_detection": {

"tags": [

{

"text": "a man with silver and black hair is sitting in a train.",

"confidence": 1.0,

"box": { "x1": 0.05, "y1": 0.05, "x2": 0.95, "y2": 0.95 }

}

]

},

"celebrity_detection": {

"tags": [

{

"text": "Brian Tyree Henry",

"confidence": 0.4303,

"box": { "x1": 0.4432, "y1": 0.323, "x2": 0.5766, "y2": 0.6355 }

}

]

},

"logo_detection": {},

"optical_character_recognition": {},

"landmark_recognition": {},

"timestamp_sec": 603228

}, ...

}

}

}
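The overlay example can be read with two small conversions, sketched below in Python. The first maps a frame index to a time using frames_per_sec; in the sample data the result matches the value stored under timestamp_sec, which therefore appears to be in milliseconds despite its name. The second scales a bounding box to pixels, assuming the box values are fractions of the frame width and height with (x1, y1) as the top-left corner and (x2, y2) as the bottom-right corner. That interpretation, the 1920x1080 frame size, and the function names are assumptions made for illustration.

```python
def frame_to_ms(frame_index, frames_per_sec):
    """Convert a frame index to a timestamp in milliseconds."""
    return round(frame_index / frames_per_sec * 1000)


def box_to_pixels(box, width, height):
    """Scale a normalized box (assumed 0..1 fractions) to pixel coordinates."""
    return (
        round(box["x1"] * width),
        round(box["y1"] * height),
        round(box["x2"] * width),
        round(box["y2"] * height),
    )


# Values from the overlay example: frame "14424", Brian Tyree Henry.
fps = 23.976023976023978
frame_ms = frame_to_ms(14424, fps)
print(frame_ms)  # matches "timestamp_sec": 601601 in the example

box = {"x1": 0.4348, "y1": 0.3123, "x2": 0.5598, "y2": 0.596}
# 1920x1080 is a hypothetical frame size, not stated in the overlay file.
pixel_box = box_to_pixels(box, 1920, 1080)
print(pixel_box)
```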

Download Tagger Results

Download tag files in the Fabric browser

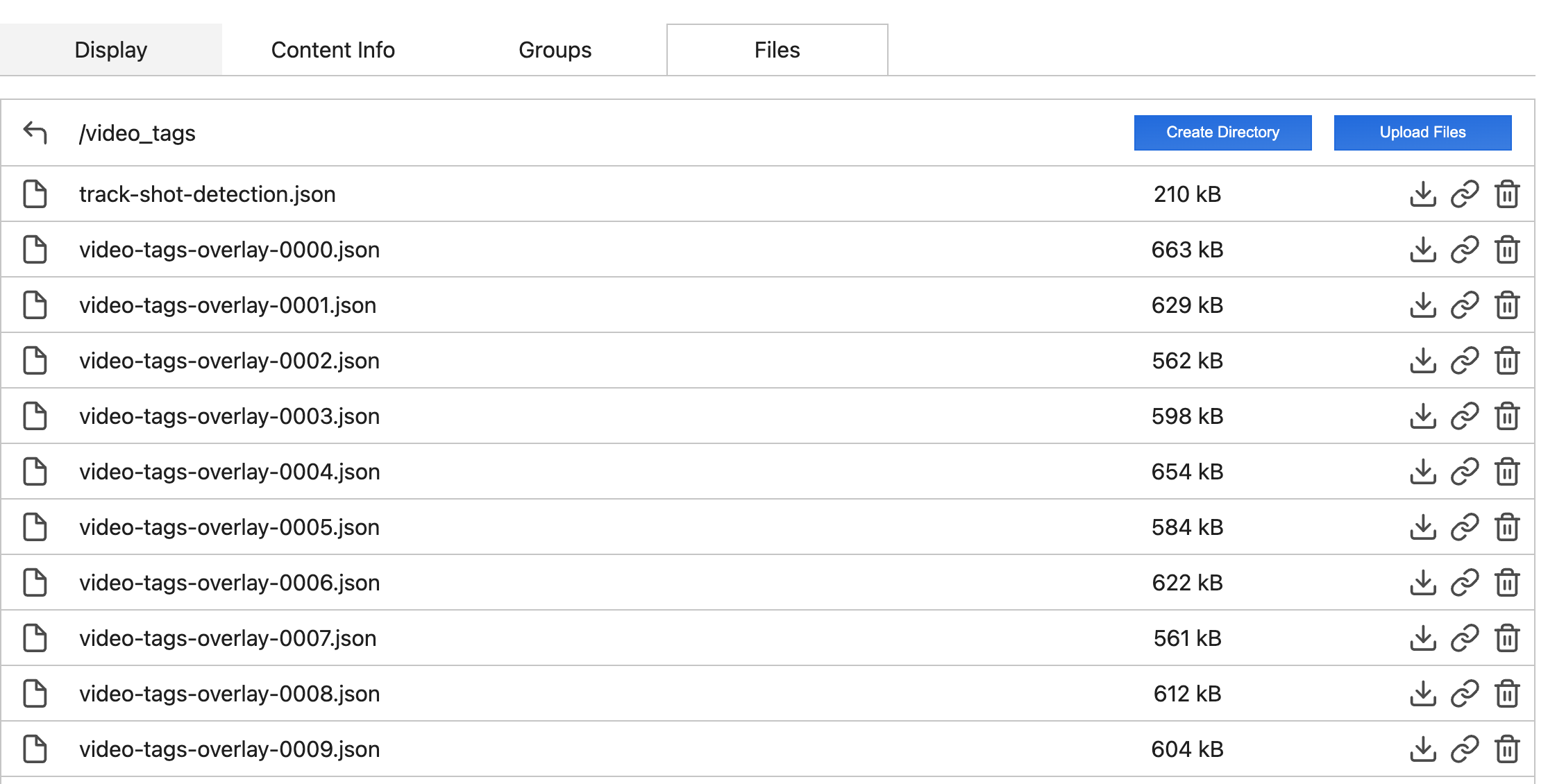

Tag files are stored on the Fabric under files/video_tags for each content object and can be downloaded in the UI, as shown in Fig. 4.

Download tag files using the Eluvio CLI tool (elv)

- Download a file or directory to the given destination

Usage:

elv files download <library id> <file> [<dest>] [flags]

Example:

elv files download --library ilib2dh1ywazUPz4caGCkDhtgGRyztTi --qid iq__2zJg7ix55XUry8q8iZTXg9WYoMVB /video_tags/ ~/Users/Documents --decryption-mode none

- Download all tags given a search index

Usage:

elv content meta get <content id, hash or write token> [path] [flags]

Example:

# search_index=iq__4Dezn5i6EZs4vFCD4qE8Xc4QbXsf

# library_id=ilib3CuSKoWVuyN584VzraSiaQXGgSvi

elv content meta get iq__4Dezn5i6EZs4vFCD4qE8Xc4QbXsf indexer/permissions/sorted_ids --qid iq__4Dezn5i6EZs4vFCD4qE8Xc4QbXsf --library ilib3CuSKoWVuyN584VzraSiaQXGgSvi 1> iqs.txt

cat iqs.txt | xargs -I {} elv files download --qid {} /video_tags ~/Users/Documents/video_tags/{} --progress --decryption-mode none
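The xargs step above can also be scripted. The Python sketch below only builds the same elv files download command line for each content ID and does not run anything. It assumes iqs.txt holds one content ID per line, which is what the xargs usage implies; the function name build_download_commands and the destination folder are hypothetical, and the flags are exactly those shown in the example above.

```python
import shlex


def build_download_commands(ids_text, dest_root):
    """Build one `elv files download` argument list per content ID.

    Assumption: ids_text contains one content ID per line; blank lines are skipped.
    """
    commands = []
    for line in ids_text.splitlines():
        qid = line.strip()
        if not qid:
            continue
        commands.append([
            "elv", "files", "download",
            "--qid", qid,
            "/video_tags", f"{dest_root}/{qid}",
            "--progress", "--decryption-mode", "none",
        ])
    return commands


# Placeholder input reusing content IDs that appear in the examples above.
ids_text = "iq__2zJg7ix55XUry8q8iZTXg9WYoMVB\n\niq__4Dezn5i6EZs4vFCD4qE8Xc4QbXsf\n"
cmds = build_download_commands(ids_text, "video_tags_out")
for cmd in cmds:
    print(shlex.join(cmd))
```

Each argument list can be passed to subprocess.run(cmd, check=True) to perform the download, which makes it easier to log or retry individual content objects than with a single xargs pipeline.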